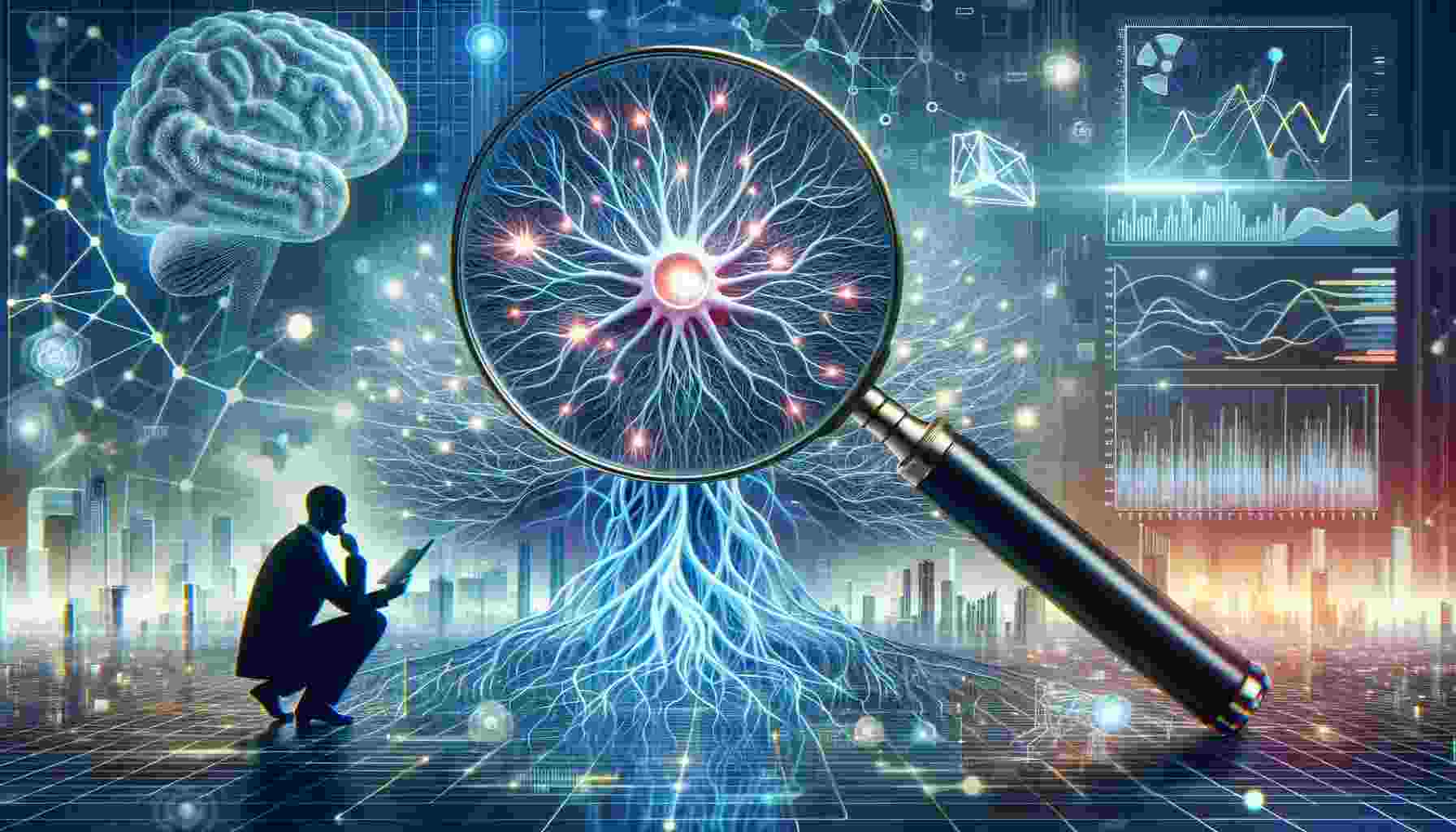

At its core, a neural network is an AI model loosely inspired by the way the human brain operates. These networks consist of layers of interconnected nodes, or neurons, which work together to interpret, process, and output data.

This ability to process complex datasets and recognize patterns makes neural networks a pivotal element in AI. Looking to learn more about neural networks? Read this article written by the AI specialists at All About AI.

The Evolution of Neural Networks: A Historical Perspective

The history of neural networks dates back to the 1940s, and the first trainable models, known as perceptrons, followed in the late 1950s.

Over the decades, as computing power surged and AI research deepened, neural networks transformed into more sophisticated architectures, capable of handling intricate tasks with greater efficiency and accuracy.

The Beginnings: Perceptrons and Early Models

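The concept of neural networks can be traced back to 1943, when Warren McCulloch and Walter Pitts proposed a mathematical model of the neuron. In the late 1950s, Frank Rosenblatt introduced the perceptron, a single-layer model that could learn its weights from examples. This early model, although simplistic, laid the groundwork for understanding how networks of artificial neurons could learn.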
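
To make the idea concrete, here is a minimal sketch of the perceptron learning rule, assuming a step activation and a toy AND-gate dataset. The function names, learning rate, and epoch count are illustrative choices, not part of any historical implementation.

```python
def step(z):
    """Threshold activation: output 1 if the weighted sum is non-negative."""
    return 1 if z >= 0 else 0

def train_perceptron(samples, epochs=20, lr=1.0):
    """Apply the perceptron learning rule to (inputs, target) pairs."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            prediction = step(sum(w * x for w, x in zip(weights, inputs)) + bias)
            error = target - prediction  # -1, 0, or +1
            # Nudge each weight toward the correct answer only when wrong.
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias

# Toy dataset (an assumption for illustration): the logical AND function.
AND_GATE = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(AND_GATE)
predictions = [
    step(sum(w * x for w, x in zip(weights, inputs)) + bias)
    for inputs, _ in AND_GATE
]
```

Because AND is linearly separable, this rule settles on weights that classify all four cases correctly. A single perceptron cannot do the same for problems that are not linearly separable, such as XOR.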

The AI Winter and Resurgence

During the 1970s and early 1980s, neural network research declined as part of a broader slowdown known as the AI winter, driven by limited computational resources and disillusionment with AI’s progress. In the mid-1980s, however, the popularization of the backpropagation algorithm revived interest in neural networks by showing that multi-layer networks could be trained effectively.

The Rise of Deep Learning

The 21st century marked the era of deep learning, where neural networks with many layers (deep neural networks) began to be used widely, fueled by increased computational power and large amounts of data. This era saw breakthroughs in areas like computer vision and natural language processing.

How Do Neural Networks Function?

Neural networks operate by processing data through multiple layers of nodes, each responsible for recognizing different patterns and features.

Understanding the Architecture

Neural networks consist of layers of interconnected nodes (neurons), each resembling a basic processing unit. The structure typically includes an input layer, one or more hidden layers, and an output layer.
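
As a rough illustration of this structure, the sketch below describes a fully connected network by its layer sizes and counts its trainable parameters. The sizes (4 inputs, 8 hidden neurons, 3 outputs) are hypothetical and chosen only for the example.

```python
# Hypothetical layer sizes: input layer, one hidden layer, output layer.
layer_sizes = [4, 8, 3]

def count_parameters(sizes):
    """Count the weights and biases in a fully connected network."""
    total = 0
    for n_in, n_out in zip(sizes, sizes[1:]):
        total += n_in * n_out  # one weight per connection between layers
        total += n_out         # one bias per neuron in the receiving layer
    return total

parameter_count = count_parameters(layer_sizes)
```

Adding hidden layers or widening them increases the number of parameters, which is one reason larger networks need more data and computation to train.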

The Role of Weights and Activation Functions

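Each connection between neurons carries a weight, and each neuron typically has a bias. A neuron multiplies its inputs by the corresponding weights, adds the bias, and passes the sum through an activation function. The activation function determines how strongly the neuron fires, which in turn influences the network’s output.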
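
A single neuron's computation can be sketched as follows. The input values, weights, and the choice of sigmoid and ReLU as activation functions are assumptions made for illustration.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    """Pass positive values through unchanged and clamp negatives to zero."""
    return max(0.0, z)

def neuron(inputs, weights, bias, activation):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)

# Illustrative values: the weighted sum here is 0.5*1.0 + (-0.25)*2.0 = 0.
inputs = [1.0, 2.0]
weights = [0.5, -0.25]
bias = 0.0

sigmoid_output = neuron(inputs, weights, bias, sigmoid)
relu_output = neuron(inputs, weights, bias, relu)
```

The same weighted sum produces different outputs depending on the activation function, which is why the choice of activation affects what a network can represent.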

How Neural Networks Learn and Evolve

Neural networks learn through a training process that can be broken into four steps.

Step 1: Initialization

The learning process begins with the initialization of weights and biases, typically set randomly. This step sets the stage for the network to start processing data.
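
A minimal sketch of this step is shown below, assuming weights drawn uniformly from a small range scaled by the number of inputs. Biases start at zero here, which is a common choice but an assumption of this sketch; the helper name `init_layer` is hypothetical.

```python
import random

def init_layer(n_in, n_out, rng):
    """Create one layer's parameters: a weight per connection, a bias per neuron."""
    limit = 1.0 / n_in ** 0.5  # keep initial weighted sums small
    weights = [
        [rng.uniform(-limit, limit) for _ in range(n_in)]
        for _ in range(n_out)
    ]
    biases = [0.0] * n_out  # assumption: biases start at zero
    return weights, biases

rng = random.Random(0)  # fixed seed so the example is repeatable
weights, biases = init_layer(3, 2, rng)
```

Random starting weights matter because neurons that begin with identical weights would receive identical updates and never learn different features.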

Step 2: Forward Propagation

Data enters the neural network and is processed sequentially through each layer, from input to output. During this stage, the network makes initial predictions based on its current weights.
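
The sketch below passes an input through a small two-layer network, assuming sigmoid activations and hand-picked weights. All numeric values are illustrative rather than trained.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases):
    """One fully connected layer: each row of weights feeds one neuron."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def forward(inputs, layers):
    """Feed the input through each layer in order, from input to output."""
    activations = inputs
    for weights, biases in layers:
        activations = dense(activations, weights, biases)
    return activations

# Illustrative parameters (not trained): 2 inputs -> 2 hidden -> 1 output.
layers = [
    ([[0.5, -0.5], [0.25, 0.75]], [0.0, 0.0]),
    ([[1.0, -1.0]], [0.0]),
]
output = forward([1.0, 1.0], layers)
```

Each layer's output becomes the next layer's input, so the prediction depends on every weight along the way.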

Step 3: Backpropagation

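After making a prediction, the network compares it against the expected output and computes the error using a loss function. Backpropagation then works backward from the output layer to the input layer, calculating how much each weight contributed to that error. An optimizer such as gradient descent uses these gradients to adjust the weights and reduce the error.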
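
To show the backward flow, here is a sketch for a tiny network with one input, one hidden neuron, and one output neuron, assuming sigmoid activations and a squared-error loss. The parameter values and learning rate are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(params, x):
    """Return the hidden activation and the prediction of a 1-1-1 network."""
    w1, b1, w2, b2 = params
    hidden = sigmoid(w1 * x + b1)
    prediction = sigmoid(w2 * hidden + b2)
    return hidden, prediction

def loss(params, x, target):
    _, prediction = forward(params, x)
    return 0.5 * (prediction - target) ** 2

def backprop(params, x, target):
    """Gradients of the loss for (w1, b1, w2, b2), computed output-first."""
    w1, b1, w2, b2 = params
    hidden, prediction = forward(params, x)
    # Output layer: error signal at the output neuron (chain rule).
    delta_out = (prediction - target) * prediction * (1.0 - prediction)
    grad_w2, grad_b2 = delta_out * hidden, delta_out
    # Hidden layer: the output error is passed backward through w2.
    delta_hidden = delta_out * w2 * hidden * (1.0 - hidden)
    grad_w1, grad_b1 = delta_hidden * x, delta_hidden
    return [grad_w1, grad_b1, grad_w2, grad_b2]

params = [0.5, 0.1, -0.3, 0.2]  # illustrative starting values
x, target = 2.0, 1.0
grads = backprop(params, x, target)

# One gradient-descent step with a hypothetical learning rate of 0.1.
learning_rate = 0.1
updated = [p - learning_rate * g for p, g in zip(params, grads)]
```

The hidden layer's gradient reuses the error signal already computed for the output layer, which is what makes backpropagation efficient in deeper networks.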

Step 4: Iterative Optimization

This entire process is iterative. The network repeatedly processes the data, improving its predictions by minimizing the error in each iteration, thereby enhancing its accuracy and reliability over time.
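
Putting the four steps together, the following sketch trains a single sigmoid neuron on the logical OR function using full-batch gradient descent. The dataset, cross-entropy loss, learning rate, epoch count, and seed are all assumptions chosen to keep the example small.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Toy dataset (an assumption for illustration): the logical OR function.
DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 1.0)]

def predict(weights, bias, inputs):
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)

def mean_loss(weights, bias):
    """Average cross-entropy error over the dataset."""
    total = 0.0
    for inputs, target in DATA:
        p = predict(weights, bias, inputs)
        total -= target * math.log(p) + (1.0 - target) * math.log(1.0 - p)
    return total / len(DATA)

def train(epochs=1000, learning_rate=0.5, seed=42):
    rng = random.Random(seed)
    weights = [rng.uniform(-0.5, 0.5) for _ in range(2)]  # Step 1: initialize
    bias = 0.0
    history = []
    for _ in range(epochs):  # Step 4: repeat
        history.append(mean_loss(weights, bias))
        grad_w, grad_b = [0.0, 0.0], 0.0
        for inputs, target in DATA:
            error = predict(weights, bias, inputs) - target  # Step 2: forward pass
            # Step 3: for sigmoid with cross-entropy, the gradient is error * input.
            grad_w = [g + error * x for g, x in zip(grad_w, inputs)]
            grad_b += error
        weights = [w - learning_rate * g / len(DATA) for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / len(DATA)
    return weights, bias, history

weights, bias, history = train()
```

The `history` list records the error before each update, so plotting or printing it shows the loss falling as training proceeds.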

What are the Different Types of Neural Networks?

There are several types of neural networks, each designed for specific tasks and data types. These include:

Feedforward Neural Networks (FNN)

These are the simplest type of neural networks, characterized by a unidirectional flow of data from the input to the output layer.

Recurrent Neural Networks (RNN)

RNNs are designed for processing sequential data, featuring loops in their architecture to allow information to persist over time, making them ideal for tasks like language modeling.
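
The loop in an RNN can be sketched with a single recurrent unit whose hidden state is fed back at every time step. The weights and the tanh activation below are illustrative assumptions.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """Combine the current input with the previous hidden state."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Process a sequence one element at a time, carrying the state forward."""
    h = 0.0
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states

# Only the first input is non-zero, yet later states remain non-zero.
states = run_rnn([1.0, 0.0, 0.0])
```

The hidden state after the second and third steps is still positive even though those inputs are zero, which is the sense in which information persists over time.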

Convolutional Neural Networks (CNN)

Convolutional Neural Networks are predominantly used in image processing. They utilize convolutional layers to efficiently identify and interpret visual patterns in data.
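
The core operation of a convolutional layer can be sketched in plain Python as a small kernel sliding across an image. The image and the vertical-edge kernel below are hand-picked for illustration; in a real CNN the kernel values are learned. As in most deep learning libraries, the kernel is applied without flipping, which is technically cross-correlation.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image with no padding and a stride of 1."""
    k_h, k_w = len(kernel), len(kernel[0])
    out_h = len(image) - k_h + 1
    out_w = len(image[0]) - k_w + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Multiply the kernel by the image patch beneath it and sum.
            total = sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(k_h)
                for dj in range(k_w)
            )
            row.append(total)
        output.append(row)
    return output

# A tiny image whose right half is bright, giving one vertical edge.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-picked kernel that responds to dark-to-bright transitions.
kernel = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d(image, kernel)
```

The feature map is non-zero only where the kernel straddles the edge, showing how a convolutional layer localizes a visual pattern while reusing the same few weights across the whole image.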

Autoencoders

These networks are used for unsupervised learning tasks, such as data compression and reconstruction, through an encoder-decoder structure.
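
The encoder-decoder structure can be illustrated with a deliberately simple sketch in which the encoder and decoder are fixed by hand rather than learned. A real autoencoder would learn both mappings by minimizing the reconstruction error; the functions here are hypothetical stand-ins.

```python
def encode(x):
    """Compress four values into two by averaging neighbouring pairs."""
    return [(x[0] + x[1]) / 2, (x[2] + x[3]) / 2]

def decode(code):
    """Expand two values back into four by repeating each one."""
    return [code[0], code[0], code[1], code[1]]

def reconstruction_error(x):
    """Mean squared difference between the input and its reconstruction."""
    x_hat = decode(encode(x))
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)

# Data that matches the structure the code assumes is reconstructed exactly.
error_structured = reconstruction_error([1.0, 1.0, 3.0, 3.0])
# Data that does not match loses information in the compressed code.
error_unstructured = reconstruction_error([1.0, 3.0, 2.0, 2.0])
```

The reconstruction error is the training signal: an autoencoder adjusts its weights so that the compressed code keeps whatever structure is most useful for rebuilding the input.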

Generative Adversarial Networks (GAN)

GANs consist of two networks, a generator and a discriminator, that are trained in competition. The generator produces new data samples that resemble a given training set, while the discriminator tries to distinguish generated samples from real ones.
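
The two objectives can be sketched as loss functions over the discriminator's outputs, where each output is the probability it assigns to a sample being real. The generator loss shown is the commonly used non-saturating variant, which is an assumption of this sketch; the function names are hypothetical.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Penalize the discriminator for doubting real data or trusting fakes.

    d_real: probability assigned to a real sample being real.
    d_fake: probability assigned to a generated sample being real.
    """
    return -(math.log(d_real) + math.log(1.0 - d_fake))

def generator_loss(d_fake):
    """Non-saturating generator loss: lower when fakes fool the discriminator."""
    return -math.log(d_fake)

# A confident, correct discriminator has a low loss...
confident_d = discriminator_loss(d_real=0.9, d_fake=0.1)
# ...while one that cannot tell real from fake has a higher loss.
confused_d = discriminator_loss(d_real=0.5, d_fake=0.5)
```

Because the generator's loss falls exactly when the discriminator is fooled, improving one network makes the other's task harder, and training alternates between the two.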

Where are Neural Networks Applied?

Neural networks find applications in a multitude of fields, including:

Healthcare: Diagnostics and Research

In healthcare, neural networks are employed for tasks such as disease detection through medical imaging, drug development research, and personalizing treatment plans based on individual patient data.

Finance: Fraud Detection and Risk Management

In the financial sector, neural networks analyze vast transactional datasets to detect fraudulent activities and assess risks in lending and investment decisions.

Automotive: Autonomous Vehicles

Neural networks are integral in developing autonomous vehicles, helping them to interpret sensory data, navigate complex environments, and make real-time decisions.

Robotics: Automation and Control

These networks are utilized in robotics for controlling robotic systems, enhancing precision in manufacturing, and improving efficiency in various automated tasks.

Entertainment: Content Recommendation

Streaming services leverage neural networks for analyzing user preferences and behaviors, enabling personalized content recommendations to enhance user experience.

What Advantages Do Neural Networks Offer?

The primary advantages of neural networks in AI include their ability to handle large and complex datasets, adapt and learn from new data, and identify patterns that might be invisible to human analysts.

Here’s a look at some of the advantages of neural networks.

- Pattern Recognition: Neural networks excel at identifying complex patterns in data, making them ideal for tasks like image and speech recognition.

- Data Processing Speed: Once trained, these networks can process large volumes of data efficiently, particularly on parallel hardware such as GPUs, enabling rapid analysis and decision-making.

- Adaptability: Neural networks have the unique ability to learn from new data, adapting their models to improve accuracy over time.

- Fault Tolerance: Because information is distributed across many connections, damage to part of a network tends to reduce its accuracy rather than halt its functionality completely.

- Decision-Making Capability: Neural networks are effective in making accurate predictions and decisions, essential in fields like healthcare and finance.

Understanding the Limitations of Neural Networks

Despite their strengths, neural networks have limitations. They require substantial amounts of data for training, can become overly fitted to their training data (overfitting), and often lack transparency in their decision-making processes (a challenge in explainable AI).

- Data Dependency: These networks require extensive datasets for training, making them less effective with limited or biased data.

- Overfitting: There’s a risk of overfitting, where networks perform exceptionally on training data but poorly on unseen data.

- Transparency: Often criticized as “black boxes,” neural networks can lack interpretability in their decision-making processes.

- Computational Intensity: The training and operation of neural networks demand significant computational resources, posing challenges in terms of energy and hardware.

- Vulnerability to Bias: Neural networks can inherit and amplify biases present in their training data, leading to skewed or unfair outcomes.

The Future of Neural Networks in AI

The future of neural networks in AI looks promising, with ongoing research focused on making them more efficient, interpretable, and versatile. As these models become more advanced, they are expected to play a pivotal role in furthering AI integration across various sectors.

Enhanced Interpretability

Future trends indicate efforts to make neural networks more transparent and interpretable, aiding in understanding how these models arrive at their conclusions.

Integration with Quantum Computing

Researchers are exploring the integration of neural networks with quantum computing, which could eventually improve computational efficiency and problem-solving capabilities for certain tasks, although this work remains at an early stage.

Advancements in Unsupervised Learning

A significant focus is being placed on developing neural networks that require minimal supervision, enhancing their capability to learn and adapt independently.

Cross-Disciplinary Applications

Expect to see neural networks being increasingly applied in diverse fields such as environmental science, psychology, and humanities, broadening their impact.

Ethical AI and Bias Reduction

Efforts are underway to develop more ethical AI systems, with a specific focus on reducing biases in neural networks, ensuring fairer and more equitable outcomes.

Conclusion

Neural networks are more than just a technological innovation; they are a testament to the progress in AI, offering both immense opportunities and challenges. As these networks continue to evolve, they will undoubtedly shape the future of technology and its integration into everyday life, paving the way for more intelligent and efficient systems.

This article was written to answer the question, “What is a neural network?” Here, we’ve discussed how neural networks work, where they are used, and their limitations and future trends. If you’re looking to learn more about other AI key terms, check out the articles in our AI Lexicon.